- Organization and architecture.

- Computer organization: how parts are combined to make a working whole.

- Physical aspects of the computer.

- Types of memory and other components.

- Signals and control protocols.

- Computer architecture: how the organization is presented to the programmer.

- Instruction sets and codes

- How memory is addressed

- How I/O is accomplished

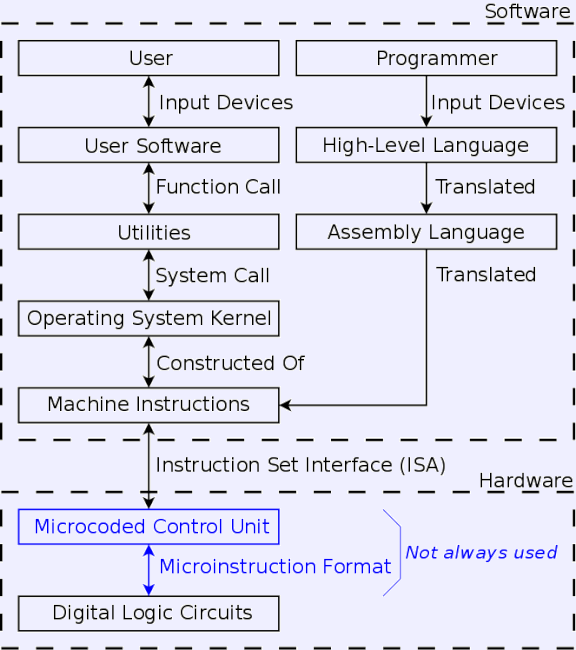

- Instruction Set Architecture (ISA): The interface between software and hardware.

- Hardware and software

- Any program is executed by some interpreter.

- That interpreter may be software or hardware.

- Of course, you have to reach hardware eventually to have something you can run.

- Could you build a JVM in hardware?

- Any task done by software could be done by hardware instead.

- Any task done by hardware could be done by software instead.

- Though the software still needs an interpreter, so you can apply the rule recursively.

- Since recursion needs a base case, you'll have some hardware eventually.

- Hardware solutions usually run faster.

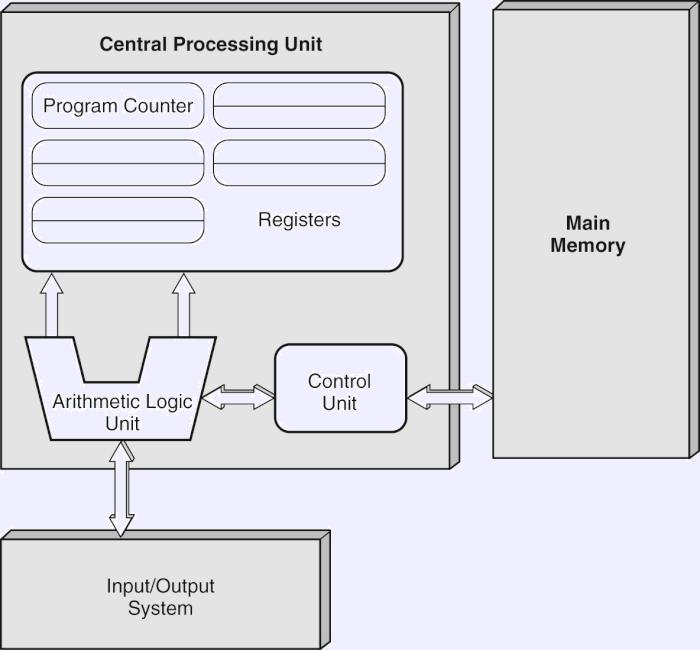

- A computer has

- Processor(s) to interpret and execute instructions

- Memory to store both data and programs.

- Mechanism to transfer data to and from the outside.

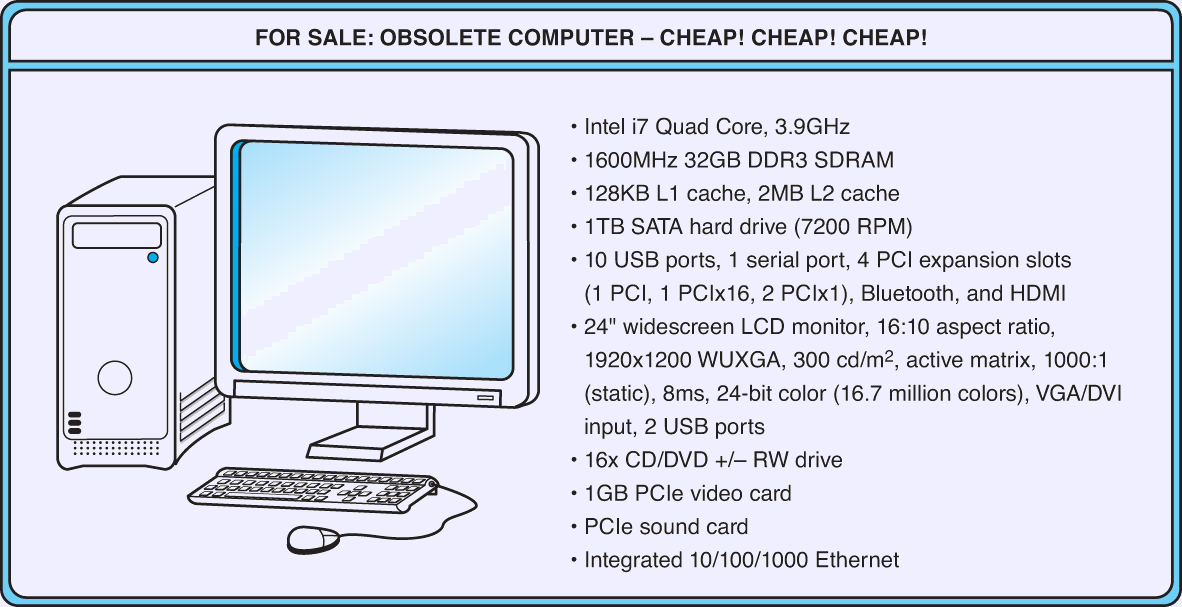

- Computer Guts.

- Sizes.

- Memory is always in binary: 1K = 1024, 1M = 1024², etc.

- Disk sizes

- Often quoted in decimal instead (1 GB = 10^9 bytes), so they look larger than the same number in binary units.

- Formatting consumes a good bit of the hardware space.
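The size arithmetic above can be checked with a few lines of Python. This is only an illustrative sketch: the constant names and the helper `decimal_gb_to_binary_gb` are made up for this example, and the 500 GB drive is a hypothetical capacity assumed to be quoted in decimal units (10^9 bytes per GB).

```python
# Binary (power-of-two) units, as used for memory sizes.
K = 2 ** 10   # 1024
M = 2 ** 20   # 1024 ** 2
G = 2 ** 30   # 1024 ** 3


def decimal_gb_to_binary_gb(gb: float) -> float:
    """Convert a capacity quoted in decimal GB (10**9 bytes)
    into binary GB (2**30 bytes)."""
    return gb * 10 ** 9 / G


if __name__ == "__main__":
    print(K, M, G)
    # Hypothetical drive sold as "500 GB" (decimal), shown in binary units.
    print(round(decimal_gb_to_binary_gb(500), 1))
```

The gap between the two unit systems grows with each prefix: about 2.4% at K, about 7.4% at G.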

- Processors.

- Typically multi-core these days: multiple CPUs on one chip.

- Clock speed in the GHz range.

- The clock is a circuit which generates a pulse at a regular rate.

- Makes the CPU do the next thing, whatever that is.

- Chapter 3.

- Chapters 4 and 5.

- Main Memory. Typically SDRAM.

- Synchronous Dynamic Random Access Memory.

- Chapter 6.

- System bus. Connects the CPU, memory, and some other parts.

- Chapter 4.

- Cache memory.

- Holds a copy of a portion of the main memory.

- Operates much faster.

- Speeds up a large percentage of memory operations, since they can be satisfied from cache.

- Chapter 6.
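To make the cache idea concrete, here is a minimal sketch of a direct-mapped cache simulator. Direct mapping is just one possible organization, chosen here for brevity; the function name `hit_rate` and the parameters `num_lines` and `block_size` are assumptions for this example, not anything from the notes or the book.

```python
def hit_rate(addresses, num_lines=8, block_size=4):
    """Simulate a direct-mapped cache and return the fraction of
    accesses that were satisfied from the cache (hits)."""
    if not addresses:
        return 0.0
    # lines[i] records which memory block is currently cached in line i.
    lines = [None] * num_lines
    hits = 0
    for addr in addresses:
        block = addr // block_size   # which block of main memory
        index = block % num_lines    # the one line that block may occupy
        if lines[index] == block:
            hits += 1                # hit: data is already in the cache
        else:
            lines[index] = block     # miss: copy the block in from memory
    return hits / len(addresses)


if __name__ == "__main__":
    # One sequential pass over 64 addresses: 1 miss, then 3 hits per block.
    print(hit_rate(list(range(64))))
    # Two passes over 32 addresses that fit entirely in the cache.
    print(hit_rate(list(range(32)) * 2))
```

Even this toy model shows why a small cache helps: neighboring and repeated addresses are served without going back to main memory.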

- Storage

- I/O bus speed.

- Moves data to and from I/O devices.

- Separate from the system bus.

- Hard drive: rotational speed matters.

- Solid state (flash) storage: Very different properties from a hard drive.

- Optical

- Chapter 7.

- (Other) peripheral devices.

- Display

- Graphics processor. (Yes, it's an extra processor.)

- Network Interface Card (NIC). (Which usually isn't a separate card anymore.)

- Standards Organizations. Standards allow various components to work together, even when made by different manufacturers.

- Institute of Electrical and Electronics Engineers (IEEE). Buses, low-level networking, many others.

- International Telecommunication Union (ITU).

- International Organization for Standardization (ISO).

- Coordinates national standards bodies.

- The US member is the American National Standards Institute (ANSI).

- Organizations specialized around specific standards.

- InterNational Committee for Information Technology Standards (INCITS). Disk I/O bus standards, and some others.

- The USB Implementers Forum.

- Generations.

- Gen. 0. Mechanical computation.

- Abacus. Really a memory system.

- Pascal's calculator. Invented 1642. Basic design still used in the 1950s.

- Babbage

- Difference engine. 1822. A calculator.

- Analytical engine. A computer.

- Punched cards.

- Early use: Jacquard Loom. Controlled patterns woven into the cloth.

- Babbage used them to control his equipment.

- Hollerith cards.

- Created for 1890 census.

- Standard for computing into the (gak!) 1980s.

- Electro-mechanical: relays.

- Konrad Zuse, Germany, during WWII.

- Harvard Mark I (started 1939).

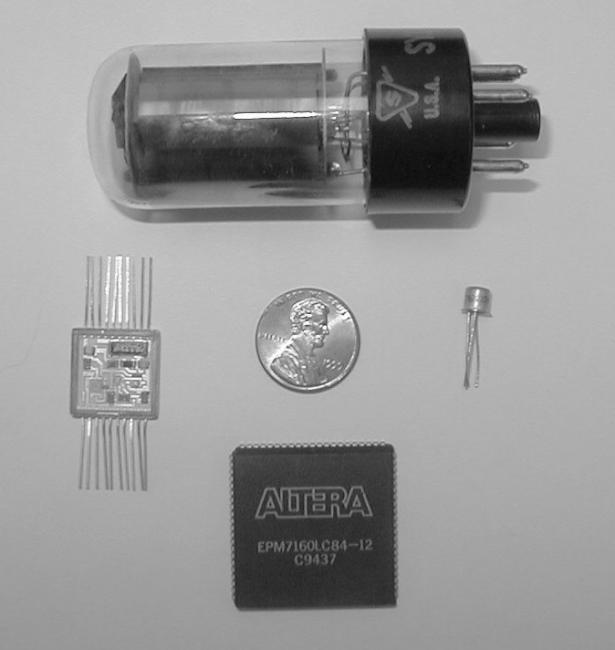

- Gen. 1. Vacuum tubes.

- Tubes must be heated. Use lots of power, leave lots of heat.

- Routinely burn out and must be replaced.

- Konrad Zuse again. Design only, though. Couldn't get the tubes.

- Atanasoff-Berry (ABC).

- Mauchly and Eckert, ENIAC.

- More than a lab toy.

- 17,468 vacuum tubes.

- Funded by the army to calculate ballistic tables.

- Gen. 2. Transistors.

- Bell Labs: Bardeen, Brattain, Shockley.

- Solid state (v. ionized) version of the vacuum tube, but

- Uses much less power.

- Generates much less heat.

- Doesn't burn out.

- Start of a large commercial computing industry.

- Gen. 3. Integrated circuits.

- Transistor circuits (including many transistors) built at once in a single device.

- Dozens at first.

- Constantly increasing.

- Allowed small computers to be cheaper, expanding use beyond government and the largest companies and universities.

- Allowed the largest computers to be larger. Rise of “supercomputers.”

- The electric valve.

- Essentially a switch controlled by current on another circuit.

- Relays, vacuum tubes, and transistors are all such controlled switches.

- Gen. 4. Very-large-scale integrated circuits.

- Chips in excess of 10,000 components.

- Or millions. Or billions.

- Particularly, a complete CPU.

- Allows the creation of the PC, and later portable computing.

- Moore's Law.

- The transistor density of silicon chips doubles every 18 months.

- Not some law of nature; will hold as long as engineers keep figuring out how to make it hold.

- Originally a prediction for 10 years out. Has held for 40.
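A quick calculation shows what doubling every 18 months implies over the 10-year and 40-year spans mentioned above. This is plain compound-growth arithmetic, not measured chip data; the function name `growth_factor` and its parameters are hypothetical.

```python
def growth_factor(years: float, doubling_months: float = 18.0) -> float:
    """Return how many times larger the density becomes after `years`,
    assuming it doubles once every `doubling_months` months."""
    doublings = years * 12 / doubling_months
    return 2 ** doublings


if __name__ == "__main__":
    for years in (1.5, 10, 40):
        print(years, "years ->", f"{growth_factor(years):,.0f}x")
```

Under this assumption, 10 years gives roughly a hundredfold increase, and 40 years gives an increase on the order of a hundred million.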

- Layered Design.

- Think the figure in the book mixes some different things.

- Machine instructions are bit patterns stored in the computer's memory.

- The Instruction Set Architecture (ISA) describes the format and meaning of the instructions.

- The ISA is the interface between software and hardware.

- Assembly language is a symbolic form of machine instructions.
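The relationship between assembly language and bit patterns can be sketched with a toy encoder. Everything here is assumed for illustration: a hypothetical 16-bit instruction word with a 4-bit opcode and a 12-bit address, and made-up opcode numbers. It does not describe any real ISA.

```python
# Hypothetical opcode assignments for a made-up 16-bit machine.
OPCODES = {"LOAD": 0x1, "STORE": 0x2, "ADD": 0x3, "HALT": 0x7}
MNEMONICS = {code: name for name, code in OPCODES.items()}


def assemble(mnemonic: str, address: int = 0) -> int:
    """Turn a symbolic instruction into its 16-bit machine word:
    the top 4 bits hold the opcode, the low 12 bits hold the address."""
    if not 0 <= address < 2 ** 12:
        raise ValueError("address must fit in 12 bits")
    return (OPCODES[mnemonic] << 12) | address


def disassemble(word: int):
    """Recover (mnemonic, address) from a 16-bit machine word."""
    return MNEMONICS[word >> 12], word & 0xFFF


if __name__ == "__main__":
    word = assemble("ADD", 0x105)
    print(format(word, "016b"))   # the bit pattern stored in memory
    print(disassemble(word))      # back to the symbolic form
```

The ISA is what fixes this mapping: which bits are the opcode, which are the operand, and what each opcode means.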

- Von Neumann Architecture

- Stored program: Program stored in memory along with the data.

- Sequential processing of instructions.

- Single path between CPU and memory: The Von Neumann Bottleneck.

- Fetch-execute cycle.

- CPU fetches the instruction denoted by the PC.

- CPU decodes the fetched instruction.

- Any needed operands are fetched.

- The operation is performed, and any results are stored.
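The four steps above can be sketched as a loop over a toy stored-program machine. The machine is an assumption for illustration only: a single accumulator, a hypothetical 16-bit word with a 4-bit opcode and 12-bit address, and made-up opcode values. Note that the program and its data share one memory.

```python
# Hypothetical opcodes for a made-up accumulator machine.
LOAD, STORE, ADD, HALT = 0x1, 0x2, 0x3, 0x7


def run(memory):
    """Run the program stored in `memory` until HALT; return the memory."""
    pc = 0    # program counter: address of the next instruction
    acc = 0   # accumulator register
    while True:
        word = memory[pc]                # 1. fetch the instruction at the PC
        pc += 1
        opcode = word >> 12              # 2. decode into opcode and address
        address = word & 0xFFF
        if opcode == HALT:
            return memory
        if opcode in (LOAD, ADD):
            operand = memory[address]    # 3. fetch any needed operand
        if opcode == LOAD:               # 4. perform the operation, store result
            acc = operand
        elif opcode == ADD:
            acc = (acc + operand) & 0xFFFF
        elif opcode == STORE:
            memory[address] = acc
        else:
            raise ValueError(f"unknown opcode {opcode:#x}")


if __name__ == "__main__":
    program = [
        0x1004,  # LOAD  4   acc = memory[4]
        0x3005,  # ADD   5   acc = acc + memory[5]
        0x2006,  # STORE 6   memory[6] = acc
        0x7000,  # HALT
        2, 3, 0, # data at addresses 4, 5, 6
    ]
    print(run(program)[6])
```

Every instruction and every operand travels over the same path between the CPU and memory, which is the bottleneck named above.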

- Some parallel systems discard Von Neumann; common ones just have several Von Neumann machines working together.

- Clustering.

- Multicomputers.

- Multi-core computers.

- What's not Von Neumann?

- Things like multiple buses, or a small number of separate memories, that aren't very different.

- Send the operation to the data, not the other way 'round.

- Instructions applied in parallel to a set of data.

- Adding computing power to memory, including hardware neural networks.