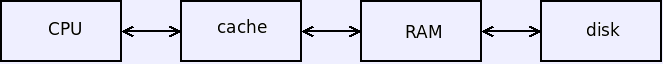

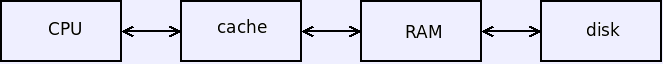

- Memory hierarchy.

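- Smaller, faster storage is on the left; larger, cheaper storage is on the right.

- Keep recently used data in the faster storage.

- The result behaves like a large, cheap storage system that is usually nearly as fast as its fastest level.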
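As a minimal sketch of "keep recent data in faster storage", the Python below puts a small, fast level in front of a large, slow one. It assumes a least-recently-used (LRU) replacement policy, which the notes do not specify; the class name `TwoLevelStore` and its parameters are hypothetical.

```python
from collections import OrderedDict


class TwoLevelStore:
    """A small, fast level (LRU-managed, an assumed policy) in front of a large, slow level."""

    def __init__(self, backing, fast_capacity):
        self.backing = backing        # large, cheap, slow level (a dict here)
        self.fast = OrderedDict()     # small, fast level, ordered from least to most recent
        self.capacity = fast_capacity
        self.hits = 0
        self.misses = 0

    def read(self, addr):
        if addr in self.fast:
            self.hits += 1
            self.fast.move_to_end(addr)      # mark as most recently used
            return self.fast[addr]
        self.misses += 1
        value = self.backing[addr]           # slow path
        self.fast[addr] = value              # keep recent data in the fast level
        if len(self.fast) > self.capacity:
            self.fast.popitem(last=False)    # evict the least recently used entry
        return value


store = TwoLevelStore({a: a * 10 for a in range(100)}, fast_capacity=2)
for a in [1, 2, 1, 3, 1, 2]:
    store.read(a)
print(store.hits, store.misses)   # 2 4
```

With a fast level holding only two entries, the access sequence 1, 2, 1, 3, 1, 2 gives two hits and four misses; reuse of recent addresses is what makes the fast level pay off.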

- Address Mapping

- Programs refer to memory locations by address.

```asm
MOV eax, [ebx]    ; load into eax the value stored at the address held in ebx
```

- The CPU uses addresses to refer to locations in the memory system.

- Only in the simplest systems are the addresses a program uses the same as the locations the memory system sees:

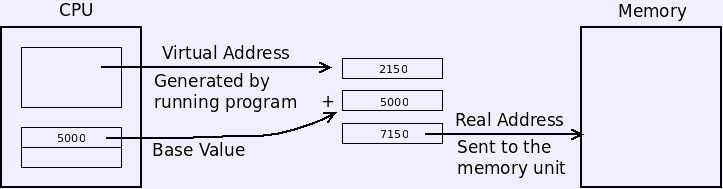

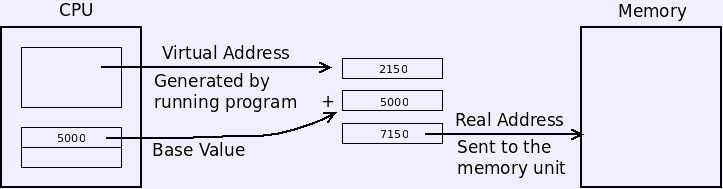

Addresses generated by the program are usually translated by the CPU before being sent to the memory unit.

- Addresses used by the hardware are real (physical) addresses.

- Addresses used by the software are virtual addresses.

- Each time the program does a store or fetch, the virtual address is translated to a real address.

- User mode code usually has no way to know what real addresses exist or how any virtual ones translate.

- The relocation problem.

- For multiprogramming, multiple programs must be loaded into memory at the same time.

- They will need to occupy different locations on different occasions.

- Since the location is not known at compile time, it must be possible to relocate a program after compilation.

- Software Relocation.

- The compiler generates an object program starting at address zero.

- The object format must distinguish pointer values (relative symbols) from other values (absolute symbols).

- When the program is copied from disk to memory, the loading software adds the program's starting address to each relative symbol.

- All addresses are now adjusted, and the program can run wherever it is located.

- Static relocation
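The software relocation steps can be sketched as a tiny loader. This is an illustration, not a real object format: each word is assumed to be a hypothetical `(value, is_relative)` pair, and memory is modeled as a Python list.

```python
def load(object_program, base, memory):
    """Copy an object program into memory starting at `base` (static relocation).

    Each word is a (value, is_relative) pair. Relative symbols hold addresses
    measured from zero, so the loader adds `base`; absolute symbols are copied as-is.
    """
    for offset, (value, is_relative) in enumerate(object_program):
        memory[base + offset] = value + base if is_relative else value


# Word 0 is a pointer to word 2; words 1 and 2 are plain data.
program = [(2, True), (7, False), (42, False)]
memory = [0] * 16
load(program, 10, memory)
print(memory[10:13])        # [12, 7, 42]
print(memory[memory[10]])   # 42: the relocated pointer still finds word 2
```

After loading, memory holds only plain numbers: the relative/absolute markings are not copied along with the values.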

- Hardware: Base and Limit Registers

- The compiler (still) generates an object program starting at zero.

- On each memory reference, the hardware adds the base address to the address generated by the instruction.

- User programs are written or compiled to load at address zero.

- The user program has no way to generate addresses that are not adjusted by the hardware.

- Usually, a limit register is included to trap references beyond a specified region.

- The user program generally has no access to the base and limit registers, so it cannot change them or even tell what its region is.

- Dynamic relocation
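Dynamic relocation with base and limit registers can be sketched as a check-then-add step on every load and store. This is a minimal model, assuming the limit register holds the region's size (some designs store an end address instead); `BaseLimitMMU` and `MemoryFault` are hypothetical names.

```python
class MemoryFault(Exception):
    """Raised when a program references an address outside its region."""


class BaseLimitMMU:
    def __init__(self, memory, base, limit):
        self.memory = memory
        self.base = base      # start of the program's region in real memory
        self.limit = limit    # size of the region (assumed representation)

    def translate(self, vaddr):
        # Every reference is checked against the limit, then relocated by the base.
        if not 0 <= vaddr < self.limit:
            raise MemoryFault(f"address {vaddr} outside region of size {self.limit}")
        return self.base + vaddr

    def load(self, vaddr):
        return self.memory[self.translate(vaddr)]

    def store(self, vaddr, value):
        self.memory[self.translate(vaddr)] = value


memory = [0] * 100
mmu = BaseLimitMMU(memory, base=40, limit=10)
mmu.store(3, 99)     # virtual address 3 -> real address 43
print(memory[43])    # 99
```

Because the program only ever sees virtual addresses, moving it means copying its region and changing `base`; none of its own values need adjusting.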

- The set of usable memory addresses is an address space.

- Swapping

- If memory is overcommitted, some process is removed from memory.

- Its memory image is copied to disk.

- It is returned later, after some other process(es) have exited.

- Without hardware relocation, it must be returned to the exact same location.

- The loaded program doesn't have any markings for absolute or relative symbols.

- The process has computed new values since it was loaded, so reloading the original object file won't help.
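The sketch below illustrates why, without hardware relocation, a swapped-out image must come back to the same place. It assumes an image that was statically relocated for real address 10 and contains one pointer; the `swap_in` helper is hypothetical.

```python
# A statically relocated image, originally loaded at real address 10:
# word 0 holds a pointer to word 2, already adjusted to 10 + 2 = 12.
image = [12, 7, 42]


def swap_in(image, base, memory):
    """Copy a swapped-out image into memory starting at `base`, unchanged."""
    memory[base:base + len(image)] = image


memory = [0] * 64
swap_in(image, 30, memory)   # returned to a different location
pointer = memory[30]
print(pointer)               # 12: still refers to the old region
print(memory[pointer])       # 0, not 42: word 2 now lives at 32
# Nothing in the image says which words are pointers, so they cannot be fixed up.
```

With a base register, the image would instead hold the unadjusted pointer 2, and the hardware would add whatever base the process has after it is swapped back in.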

- Partition Management. The region of memory occupied by a running program is called a partition.

- Fixed partitions. Largely obsolete.

- Memory is divided at boot time, and new jobs are placed where they will fit.

- Space is wasted whenever a job is smaller than the partition it is placed in.

- If the only available partition is too big, we face a dilemma: delay the job until a better-fitting partition frees up, or use the big one and waste the extra space.

- The waste from this unneeded space is called internal fragmentation.
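Internal fragmentation can be measured directly. This sketch assumes a hypothetical placement rule (use the smallest free partition that fits) and reports the wasted space; partition sizes are arbitrary units.

```python
def place_job(partitions, free, job_size):
    """Place a job in a fixed partition.

    partitions: list of partition sizes, fixed at boot time.
    free: set of indices of currently unused partitions (updated in place).
    Returns (index, internal_fragmentation), or None if the job must wait.
    """
    fits = [i for i in free if partitions[i] >= job_size]
    if not fits:
        return None
    best = min(fits, key=lambda i: partitions[i])   # smallest partition that fits
    free.remove(best)
    return best, partitions[best] - job_size


partitions = [100, 200, 500]
free = {0, 1, 2}
print(place_job(partitions, free, 150))   # (1, 50): 50 units wasted
print(place_job(partitions, free, 150))   # (2, 350): chose waste over delay
print(place_job(partitions, free, 50))    # (0, 50)
```

The second call shows the delay-or-waste dilemma: only the large partition fits, and this rule accepts 350 units of internal fragmentation rather than making the job wait.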

- Variable partitions.

- Partitions are created or destroyed as jobs need them.

- Free partitions can be kept in a linked list.

- Simply link free blocks together into a list.

- The empty partitions themselves are the nodes, so the space for the list is “free”.

- Can be expensive to search.

- Finding adjacent free blocks can be expensive.
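The linked-list approach can be sketched as follows. This is a simplified model, assuming first-fit allocation and keeping the holes in an ordinary Python list sorted by address rather than threading the nodes through the free memory itself; the class `FreeList` is hypothetical. Both allocating and freeing walk the list, which is where the search cost comes from.

```python
class FreeList:
    """Free partitions (holes) kept as (start, size) pairs, sorted by start."""

    def __init__(self, start, size):
        self.holes = [(start, size)]

    def allocate(self, size):
        # First fit: linear search for the first hole that is big enough.
        for i, (start, hole_size) in enumerate(self.holes):
            if hole_size >= size:
                if hole_size == size:
                    del self.holes[i]                               # exact fit
                else:
                    self.holes[i] = (start + size, hole_size - size)  # split the hole
                return start
        return None  # no hole is large enough

    def free(self, start, size):
        # Another linear search to find where the freed block belongs.
        i = 0
        while i < len(self.holes) and self.holes[i][0] < start:
            i += 1
        self.holes.insert(i, (start, size))
        # Merge with the following hole if it starts where this block ends.
        if i + 1 < len(self.holes) and start + size == self.holes[i + 1][0]:
            self.holes[i] = (start, size + self.holes[i + 1][1])
            del self.holes[i + 1]
        # Merge with the preceding hole if it ends where this block starts.
        if i > 0 and self.holes[i - 1][0] + self.holes[i - 1][1] == start:
            prev_start, prev_size = self.holes[i - 1]
            self.holes[i - 1] = (prev_start, prev_size + self.holes[i][1])
            del self.holes[i]


fl = FreeList(0, 100)
a = fl.allocate(30)    # 0
b = fl.allocate(20)    # 30
fl.free(a, 30)
fl.free(b, 20)
print(fl.holes)        # [(0, 100)]: all three pieces merged back together
```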

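- Free partitions can be kept in a map (for example, a bitmap with one bit per fixed-size block).

- Still requires a linear search, though using offsets.

- The map's space is not free: it is stored apart from the blocks it describes.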

- Main advantage: easy to find adjacent groups of free blocks.
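If the map is a bitmap with one entry per fixed-size block (an assumption; the notes only say "map"), finding room for a new partition is a scan for a long-enough run of free entries. The helpers `find_free_run` and `mark` are hypothetical.

```python
def find_free_run(bitmap, n):
    """Return the index of the first run of n free blocks (0 = free), or None."""
    run_start, run_len = 0, 0
    for i, used in enumerate(bitmap):
        if used:
            run_len = 0            # run broken by an allocated block
        else:
            if run_len == 0:
                run_start = i      # a new run of free blocks begins here
            run_len += 1
            if run_len == n:
                return run_start
    return None


def mark(bitmap, start, n, used):
    """Mark n blocks starting at `start` as used (1) or free (0)."""
    for i in range(start, start + n):
        bitmap[i] = used


bitmap = [1, 0, 0, 1, 0, 0, 0, 1]
start = find_free_run(bitmap, 3)   # 4
mark(bitmap, start, 3, 1)
print(bitmap)                      # [1, 0, 0, 1, 1, 1, 1, 1]
```

Adjacent free blocks need no separate merging step: neighboring entries in the map are neighbors in memory.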

- External Fragmentation.

- The system tends to accumulate empty slots too small to use.

- When a new partition is needed, the chance that it fits any hole exactly is very small.

- If it's a little too big, we put it elsewhere (or can't store it at all).

- If it's a bit smaller, we create a useless fragment.

- If it's small enough to leave a useful partition, we'll just create the fragment next time around.

- Compaction is possible (with hardware relocation), but expensive.
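Compaction can be sketched as sliding every partition toward low memory so the free space becomes one hole. This assumes hardware relocation, so moving a process only means copying its region and updating its base; the `compact` function and the `(base, size)` table are hypothetical.

```python
def compact(partitions, memory):
    """Slide every partition down to low memory, leaving one free hole at the top.

    partitions maps a process id to its (base, size) and is updated in place.
    Returns the start address of the single remaining hole.
    """
    next_free = 0
    # Visit partitions in address order so each copy moves data downward.
    for pid, (base, size) in sorted(partitions.items(), key=lambda kv: kv[1][0]):
        if base != next_free:
            # The expensive part: copying the whole region (slice copy is overlap-safe).
            memory[next_free:next_free + size] = memory[base:base + size]
            partitions[pid] = (next_free, size)   # new base register value
        next_free += size
    return next_free


memory = list(range(20))
partitions = {"A": (2, 3), "B": (8, 4)}
hole_start = compact(partitions, memory)
print(partitions)   # {'A': (0, 3), 'B': (3, 4)}
print(hole_start)   # 7: addresses 7..19 now form one free hole
```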

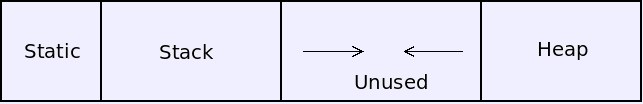

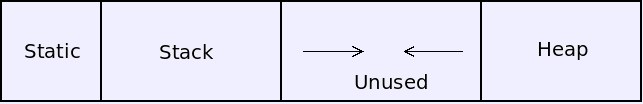

- Internal organization.

- A partition may have extra space to allow for growth.

- It is typical to let the heap and stack grow toward each other from opposite ends of the partition, so we don't have to predict which will grow more.

- Some architectures allocate separate partitions for the stack and heap, even though they belong to the same process.
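The shared-partition layout can be sketched as two pointers moving toward each other. This is a minimal model, assuming the heap grows up from the bottom of the partition and the stack grows down from the top; `Partition` and `PartitionFull` are hypothetical names.

```python
class PartitionFull(Exception):
    """Raised when the heap and stack would overlap."""


class Partition:
    """One partition: heap grows up from the bottom, stack grows down from the top."""

    def __init__(self, size):
        self.size = size
        self.heap_top = 0        # first address above the heap
        self.stack_top = size    # lowest address currently used by the stack

    def grow_heap(self, n):
        if self.heap_top + n > self.stack_top:
            raise PartitionFull("heap would collide with stack")
        self.heap_top += n

    def push_stack(self, n):
        if self.stack_top - n < self.heap_top:
            raise PartitionFull("stack would collide with heap")
        self.stack_top -= n

    def free_space(self):
        # The single gap between the two is available to whichever grows next.
        return self.stack_top - self.heap_top


p = Partition(100)
p.grow_heap(70)        # a heap-heavy process
p.push_stack(20)
print(p.free_space())  # 10
```

Neither region needs a fixed share in advance: a heap-heavy process and a stack-heavy one can use the same partition size, and the partition is only full when the two meet.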